26 May 2008

Matt Ball recently pointed out an article coming to press at the Yale Journal of Law and Technology, titled “Government Data and the Invisible Hand”. Since I just got back from San Francisco, where I gave a talk titled “Robocop: Public Service in the Internet Age”, it feels like the universe is vibrating on a particular wavelength right now, saying

“free your data, let the world play in your garden!”

My talk was itself organized around the ideas presented in the UK “Power of Information” report, which I learned about from a high-level civil servant in the BC government.

Vibrate, universe, vibrate!

Coles Notes of my talk:

- public service

- on the internet

- is a new medium

- it requires a new approach

- serve online communities

- serve alternative users of information

- provide open access to your information

- remove policy barriers

- expect re-use, encourage it

- use standard formats

- become part of the internet

26 May 2008

Those not on the PostGIS users mailing list will have missed an interesting thread that pulled together some good comments from both the usual suspects and some unexpected participants.

Some choice quotes:

- “Yes, using SDE effectively castrates the spatial database. It still walks and talks, but it’s a shell of the man it was before.” – yours truly

- “The ‘economical’ route for those that want to use PostGIS and have edit capabilities inside ArcGIS desktop would be to use the ZigGIS professional which (from my understanding) implements an editable PostGIS layer in ArcGIS desktop.” – Rob Tester

- “If you compare [pricing] with Oracle then yes probably so… But honestly if you are talking about SQL Server vs. PostgreSQL, I think the savings you get from running PostgreSQL would be dwarfed by the cost of SDE.” – Regina Obe

- “Spatial databases are being commoditized, but SDE is not a spatial database, it’s a revenue enhancer for ArcMap, and ArcMap is not being commoditized.” – yours truly

- “A few disclaimers and background : Until last year, I worked at ESRI. I contributed with some of bug fixes for the PostgreSQL/PostGIS support in the ArcObjects side of things.” – Ragi Burhum (read this one!)

- “There is one thing to note in a mixed-client environment and that is this: if a non-ESRI client writes an invalid geometry to the database then when ArcSDE constructs a query over an area that includes this feature ArcSDE will discover the error (as it passed all queried features into its own shape checking routines) and stop the query” – Simon Greener (read this one!)

I’m thinking of becoming an instigator. Apparently there’s money in p***ing people off.
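Simon’s warning suggests a defensive habit for mixed-client shops: check geometries before writing them. Inside PostGIS, ST_IsValid does this job. The Python below is only a minimal sketch of one such check, assuming simple polygon rings given as lists of (x, y) tuples: it rejects rings that are not closed or whose non-adjacent edges touch or cross (the classic “bow-tie”). It is not ArcSDE’s shape-checking logic, it ignores other validity rules (holes, degenerate spikes), and `ring_is_simple` and its helpers are hypothetical names.

```python
Point = tuple[float, float]


def _orient(a: Point, b: Point, c: Point) -> int:
    """Sign of the cross product (b - a) x (c - a): 1, -1, or 0 if collinear."""
    v = (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])
    return (v > 0) - (v < 0)


def _on_segment(a: Point, b: Point, p: Point) -> bool:
    """For p collinear with a-b: is p within the segment's bounding box?"""
    return (min(a[0], b[0]) <= p[0] <= max(a[0], b[0])
            and min(a[1], b[1]) <= p[1] <= max(a[1], b[1]))


def _segments_intersect(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """Orientation test: do the closed segments p1-p2 and q1-q2 touch or cross?"""
    o1, o2 = _orient(p1, p2, q1), _orient(p1, p2, q2)
    o3, o4 = _orient(q1, q2, p1), _orient(q1, q2, p2)
    if o1 != o2 and o3 != o4:
        return True
    # Collinear cases: an endpoint of one segment lies on the other.
    return ((o1 == 0 and _on_segment(p1, p2, q1))
            or (o2 == 0 and _on_segment(p1, p2, q2))
            or (o3 == 0 and _on_segment(q1, q2, p1))
            or (o4 == 0 and _on_segment(q1, q2, p2)))


def ring_is_simple(ring: list[Point]) -> bool:
    """True if the ring is closed, has at least 4 points, and no two
    non-adjacent edges touch or cross."""
    if len(ring) < 4 or ring[0] != ring[-1]:
        return False
    edges = list(zip(ring, ring[1:]))
    n = len(edges)
    for i in range(n):
        for j in range(i + 1, n):
            # Adjacent edges (including last/first) legitimately share a vertex.
            if j == i + 1 or (i == 0 and j == n - 1):
                continue
            if _segments_intersect(*edges[i], *edges[j]):
                return False
    return True


if __name__ == "__main__":
    square = [(0, 0), (1, 0), (1, 1), (0, 1), (0, 0)]
    bowtie = [(0, 0), (1, 1), (1, 0), (0, 1), (0, 0)]
    print(ring_is_simple(square))  # True
    print(ring_is_simple(bowtie))  # False
```

A client that refuses to write rings failing this test at least keeps bow-ties out of the shared tables; a full validity check like ST_IsValid covers many more cases.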

14 May 2008

Elephant crushes dolphin, but dolphin drowns elephant?

More folks doing real work needing a real spatial database, this time RedFin, a real estate information company.

Specifically, we were having some major performance problems with queries that were constrained by both spatial and numeric columns, and all of our attempts to squeeze more performance out of MySQL (including hiring expensive outside consultants) had come to naught.

Guys, if you’re going to hire expensive outside consultants to help with your spatial database problems, they should be (a) me and (b) conversant in PostGIS! :)

Update: It is worth explaining that their main problem, the slow results when the spatial clause was weak (that is, when it matched a large fraction of the rows), is a direct result of MySQL having an inferior query planner. PostGIS is fast because PostGIS provides good statistics on spatial index selectivity to the planner, and the PgSQL planner is flexible enough to accept selectivity information from extended types. I know this is a big performance problem because before PostGIS had a decent selectivity system, performance bottlenecks like RedFin’s happened all the time.
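To make the selectivity point concrete, here is a toy sketch in Python of why statistics matter when a query has both a spatial and a numeric clause. It is not PostgreSQL’s or PostGIS’s actual code. It assumes a planner that drives the query from whichever index it expects to return fewer rows and applies the other clause as a filter. `Box`, `spatial_selectivity`, `choose_index`, the 2x2 grid histogram and the fixed guess of 0.005 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    xmin: float
    ymin: float
    xmax: float
    ymax: float

    def overlap_fraction(self, other: "Box") -> float:
        """Fraction of this box's area that `other` covers (0.0 to 1.0)."""
        w = min(self.xmax, other.xmax) - max(self.xmin, other.xmin)
        h = min(self.ymax, other.ymax) - max(self.ymin, other.ymin)
        if w <= 0 or h <= 0:
            return 0.0
        return (w * h) / ((self.xmax - self.xmin) * (self.ymax - self.ymin))


def spatial_selectivity(histogram, query: Box) -> float:
    """Estimate the fraction of rows inside `query` from a histogram of
    (cell, row_count) pairs, assuming rows are spread evenly within a cell."""
    total = sum(count for _, count in histogram)
    hits = sum(count * cell.overlap_fraction(query) for cell, count in histogram)
    return hits / total


def choose_index(spatial_sel: float, numeric_sel: float) -> str:
    """Drive the scan from the clause expected to return fewer rows;
    the other clause becomes a filter on the rows that come back."""
    return "spatial" if spatial_sel <= numeric_sel else "numeric"


# Hypothetical statistics: 400 listings spread evenly over a 2x2 grid.
HISTOGRAM = [
    (Box(0, 0, 1, 1), 100),
    (Box(1, 0, 2, 1), 100),
    (Box(0, 1, 1, 2), 100),
    (Box(1, 1, 2, 2), 100),
]

# Hypothetical fixed guess used by a planner with no spatial statistics.
BLIND_SPATIAL_GUESS = 0.005

if __name__ == "__main__":
    weak_box = Box(0, 0, 2, 1.5)  # covers most of the data: a "weak" clause
    price_sel = 0.01              # e.g. "price < X" matches 1% of rows
    sel = spatial_selectivity(HISTOGRAM, weak_box)
    print(sel)                                           # 0.75
    print(choose_index(sel, price_sel))                  # numeric
    print(choose_index(BLIND_SPATIAL_GUESS, price_sel))  # spatial
```

With the histogram, a box covering three quarters of the data is estimated at 0.75, so the numeric index wins. A planner with only a blind constant for the spatial clause picks the spatial index and then has to filter most of the table, which is the RedFin symptom in miniature.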

13 May 2008

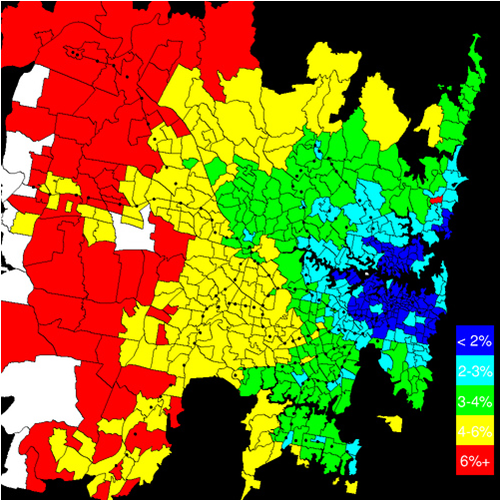

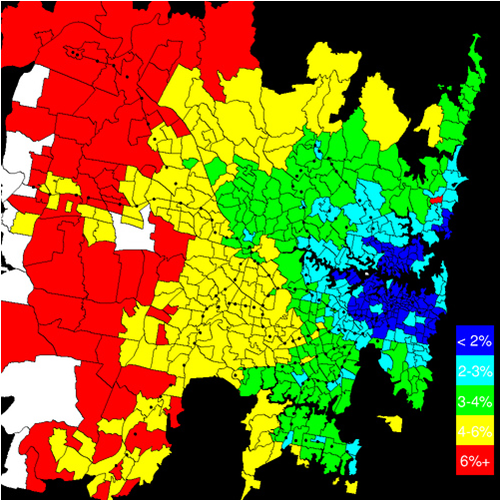

My purchase of a house in central Victoria eight years ago just keeps getting better and better (I can walk everywhere I want to go, daily amenities within 500m, all of downtown within 2km). Paul Krugman has posted a map from the Sydney Morning Herald, showing the expected proportion of family income that will go to petrol as prices rise.

I will refrain from gloating to my colleagues in exurban Atlanta and Phoenix. Except this probably counts. Oh well, in for a penny, in for a pound: suckers!

I wonder if David Brooks will recant his (surprisingly recent) love letter to the exurb. James Howard Kunstler hasn’t been that good at predicting the future, but his take on Atlanta from five years ago is looking more and more spot on.

13 May 2008

Props to Chris Holmes, posting about the work going into making the next version of GeoServer fully crawleable (not a real word! (yet!)) by web spiders, right in sync with Jack Dangermond’s announcement of the same feature coming in ArcServer.

The government here in British Columbia is moving haltingly towards a more service-oriented approach to spatial data publishing, and this has included a strong awareness that being consumable by Google tools, and spidered by search engines is key to improving general accessibility. If the citizen’s first stop in searching for information is Google or MSN, it makes sense to spend some effort ensuring your relevant information is properly exposed via those services.
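One common way to point spiders at published data is a sitemap file (the sitemaps.org protocol) listing one HTML page per feature. The Python below is a minimal sketch of that idea, not GeoServer’s or ArcServer’s actual mechanism; the function name, the base URL and the per-feature URL pattern are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(base_url: str, layer: str, feature_ids) -> str:
    """Return sitemap XML listing one crawlable page per feature.

    The URL pattern base_url/layer/<id>.html is a hypothetical example;
    a real map server would use whatever per-feature pages it publishes.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for fid in feature_ids:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = f"{base_url}/{layer}/{fid}.html"
    # ElementTree escapes characters such as "&" in the text for us.
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap("https://maps.example.gov", "parks", [101, 102]))
```

The sitemap only tells search engines where to look; the per-feature pages still need content worth indexing.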

Free business opportunity: establish a buttoned-down search engine optimization firm targeted exclusively at government clients.

Now, why is Google sharing the stage with ESRI? ESRI needs Google’s stamp of approval a lot more than Google needs ESRI’s – what’s in it for Google? Is Google worried that Virtual Earth is doing better at cultivating government application development opportunities than the Google suite? Google plays well with the hacker set, but is a harder sell with the suits than tried-and-true Microsoft, particularly in big, conservative organizations.