06 Feb 2006

I got home from Chicago this afternoon, and though I am a little loopy from travel, I had better write something down before the moment is gone.

It was a very interesting experience to have all these open source people in the same room at the same time. While I had met all of them (with the exception of a couple of Autodesk folks) at different times and places, this was the first time that particular combination of people had been in the same place at once.

The discussion was good, and surprisingly on point the whole time, which is remarkable for a group of 25 opinionated people. Gary Lang of Autodesk did an excellent job of ensuring that all the important topics were covered, while letting the discussion move at more or less its own pace. The IRC channel was a great forum for carrying on discussions in parallel with the verbal ones. Rather than interrupting a speaker, folks could jot a point into IRC, and if it was trenchant enough it would come up verbally shortly anyway. An interesting meld of old and new forms of discourse.

The summaries at Directions Magazine and Mapping Hacks cover the details of the decisions quite well, so I won’t go over that ground again.

One thing that the recaps do not cover is that I was under some pressure on a couple of occasions to bring PostGIS (and to a lesser degree uDig) to the Foundation right away. I recognize that PostGIS is considered a core piece of the open source GIS product stack right now, and that is what is generating the pressure – the desire to have all the “big guns” under the Foundation roof right away. But we have spent a lot of effort to get PostGIS to where it is, and I am not inclined to jump into the Foundation without giving a good deal of thought to the benefits and risks.

As I discussed a couple of months ago, our open source work is a company calling card for our abilities: our abilities with the products we make, and our abilities in general. Our projects let potential clients understand the quality of our work and our enthusiasm, even if they have never met us or even heard of us before: the projects are tangible proof that we are a good company. If we move our projects into the Foundation, the direct link between the projects and ourselves is severed. Now they are Foundation projects, not Refractions projects, and the “halo” effect is somewhat diminished. Sure, smart folks who read the email lists or the commit logs will figure out that we are doing the work, but that is a long way from the current, fairly obvious linkages between the projects and the company.

The flip side is that taking the projects away from the (one, smallish) company and into the Foundation (with Big Important Companies as funders and backers) adds a new halo of legitimacy to the projects themselves. And therein lies the calculation. Does making the projects look better help us enough to offset the loss of direct project/company linkage?

I do not know.

One of the things I will be doing is talking to people who have some distance from the whole issue, to see how they perceive us, our projects, and the Foundation. I certainly do not have enough distance to have an undistorted view, and neither do the people in Chicago who wanted us to join right away. So it will take some time and some thought.

Strangely, it seemed that we were the only organization with this particular quandary. Autodesk actually wants to break the linkage between their open source project and their company. Most of the other projects are classic multi-individual projects that came into being without corporate gestation. One exception is ka-map, which was gestated by DM Solutions, and which will eventually put them into the same should-we/shouldn’t-we cycle I am in now. But ka-map is still a new project, so people were not pressuring them to jump into the pool right out of the gate.

15 Dec 2005

James Fee’s latest posting about his experiences with ESRI technical support has unleashed a torrent of responses, most of which agree that the ESRI developer experience has gone downhill over the last few years, but some of which are downright surreal in their defence of the GIS giant:

ESRI should pull your blog off of the EDN page. If you don’t like ESRI then leave, don’t come to the Developer Summit and stop posting about ESRI.

– Kristina Howard

Wow! Go back to Russia, James, you commie freak!

This is going a little off the deep end in the name of brand loyalty. After all, James and the other people commenting on his blog are expressing dissatisfaction with a commercial service they paid a lot of money for. They aren’t bitching about their politicians, or the weather.

Ironically, a number of people commenting (yes, including me) are pointing out that the service level in the open source world, where much of the support is completely free, is far higher. My personal point is that if you go one step further and actually pay for support in the open source world, it can be beyond exemplary, certainly far better than any large software company can hope to provide with phone banks and Tier 1, 2, 3 service levels.

14 Oct 2005

I recently had an opportunity to perform an experiment of sorts on an open source community, the PostGIS community. The hypothesis was: an open source community would contribute financially to a shared goal, to directly fund development, with multiple members each contributing a little to create a large total contribution. Note that this is different from a single community member funding a development they need (and others might also need but not pay for). This is a number of entities pitching in simultaneously, and really is the preferred way (I think) to share the pain and share the gain.

The story started with a post from Oleg Bartunov on the PostgreSQL hackers list:

I want to inform that we began to work on concurrency and recovery support in GiST on our’s own account and hope to be ready before 8.1 code freeze. There was some noise about possible sponsoring of our work, but we didn’t get any offering yet, so we’re looking for sponsorship!

The original noise about sponsorship had actually come from me, about 18 months earlier, when it became clear that the full table locks in the existing GiST index code were going to be a future bottleneck. So, I felt obligated to follow up! Here was a chance to see open source “values” in action: everyone would benefit from this improvement, so surely when given the opportunity to help fund it and ensure it came to fruition, contributors would leap to the fore. I wrote to postgis-users:

Oleg and Teodor are ready to attack GiST concurrency, so the time has come for all enterprise PostGIS users to dig deep and help fund this project … Refractions Research will contribute $2000 towards this development project, and will serve as a central bundling area for all funds contributed towards this project. As such, we will provide invoices that can be provided to accounts payable departments and cash cheques on North American banks to get the money overseas to Oleg and Teodor. No, we will not assess any commissions for this: we want to see this done.

The response was… underwhelming. There is nothing like a sense of urgency to provoke motion. The problem is analogous to standing in front of a crowd with an unpleasant task and yelling “any volunteers?” Everyone waits for someone else to step forward. As the silence stretches out, the pressure builds and eventually volunteers step forward. On an email list, though, no one can hear the silence! My second email was an attempt to make the silence palpable:

Just an update. There are 700 people on this mailing list currently. As of this morning, I have received zero (0) responses on this.

At this point the dam finally broke and multiple companies came forward to contribute. Some contributors were companies that use PostGIS in their operations and could actually anticipate the future need for improved concurrency. This was classic enlightened self-interest, and my only disappointment was that such a relatively small cross-section of the PostGIS user community was capable of, or interested in, seeing the long-term benefit of the short-term investment.

Other contributors signed on purely because it was the “right thing to do”. This was also a form of enlightened self-interest, but one with a much broader view of future personal benefit. The best example of this was Cadcorp, a spatial software company from the UK. Cadcorp makes proprietary GIS software. Cadcorp does not even use PostGIS themselves (except for testing). But their clients do use PostGIS, and their product can act as a PostGIS data viewer and editor. Cadcorp invested in improving PostgreSQL, because it would improve PostGIS, which would improve their clients’ experience of using their software with PostGIS, which would (in the long view) enhance the value of their software. Now that is seeing the big picture.

The final totals were both quite good (about $8000 total contributions, with no individual contribution exceeding $2000) and not so good (only 9 contributing companies given 700+ members on the postgis-users mailing list).

The final technical results were great! Index recovery from system crashes now works transparently, and performance under concurrent read/write load no longer hits a brick wall as it scales.

I am pleased that this work got done, but I think the experience has taught me a few things about drumming shared contributions out of the community:

- A sense of urgency is required. The work had to be done before the PostgreSQL 8.1 release, or a whole release cycle would go by before we had an opportunity to get this into PostgreSQL again.

- A visible target would help. Having a “United Way thermometer” would probably have been a good thing. Having a fixed funding target would also help.

- A well-written prospectus helps. I did not have one to start, but did one up and it was used by a number of technical community members to get approval from their non-technical managers.

- A thick skin would help (I don’t have one). The number of people who will free-ride is very high, and even some very deep-pocketed organizations will free-ride. This is deeply annoying when you see individuals and smaller organizations coming to the table generously, but there is nothing to be done about it.

So now we are done, the press release is written, and hopefully we will have an article about this on Newsforge soon!

09 Oct 2005

Web services are a “big deal” these days, garnering lots and lots of column inches in mainstream IT magazines. The geospatial world is no exception: after years of stagnation the number of Open Geospatial Consortium (OGC) web services publicly available on the internet is really starting to explode, going from around 200 to 1000 over the last nine months.

Great news! Except… now we have to find the services so we can use them in clients. The web services mantra is “publish, find, bind”. “Publish” is going great guns, “bind” is working well with good servers and clients, but “find”… now that is another story.

The OGC has a specification for a “Catalogue Services for the Web” (CSW), which is supposed to fill in the “find” part, but:

- It is 180 pages long;

- You also need a “profile” for services, another 40 pages; and,

- The profile catalogues information at a “service” level, rather than at a “layer” level.

The OGC specification is designed to handle a large number of use cases, most of which are irrelevant to spatial web services clients currently in action. By designing for the future, they may have foreclosed on the present, because there are much easier ways to get at catalogue information than via CSW.

For example, if you want a quick raw listing of spatial web services, try this link:

http://www.google.ca/search?q=inurl%3Arequest%3Dgetcapabilities

Instant gratification, and you did not have to read a single page of the CSW standard!

With a little Perl, some PostgreSQL and PostGIS you can turn the results of the above into a neat layer-by-layer database and allow people to query it with a very simple URL-based API:

http://udig.refractions.net/search/google-xml.php?keywords=robin&xmin=-180&ymin=-90&xmax=180&ymax=90

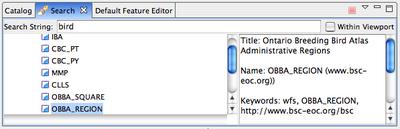

This kind of simple catalogue access became a clear necessity while we were building uDig – there were no CSW servers available with a population of publicly available services, and in any event the CSW profiles did not allow us to search for information by layer, the fundamental unit of interactive mapping.
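Searching by layer means first splitting each capabilities document into its layers. Here is a minimal sketch of that step for a WMS 1.1.1 GetCapabilities response, using only Python's standard library. It is an illustration, not the Perl actually used for the catalogue, and it assumes the un-namespaced WMS 1.1.1 layout (WMS 1.3.0 adds an XML namespace and uses `EX_GeographicBoundingBox` instead). It skips unnamed grouping layers and lets a child layer inherit its parent's `LatLonBoundingBox` when it has none of its own. The record fields (`service`, `name`, `title`, `bbox`) are hypothetical.

```python
import xml.etree.ElementTree as ET


def _bbox(layer, inherited):
    """Read a layer's LatLonBoundingBox, falling back to the parent's box."""
    box = layer.find("LatLonBoundingBox")
    if box is None:
        return inherited
    return tuple(float(box.get(k)) for k in ("minx", "miny", "maxx", "maxy"))


def _walk(layer, inherited, service_url, out):
    """Depth-first walk over nested <Layer> elements, collecting named ones."""
    bbox = _bbox(layer, inherited)
    name = layer.findtext("Name")
    if name:  # unnamed layers are only grouping containers; they cannot be requested
        out.append({"service": service_url, "name": name,
                    "title": layer.findtext("Title", default=""), "bbox": bbox})
    for child in layer.findall("Layer"):
        _walk(child, bbox, service_url, out)


def layers_from_capabilities(xml_text, service_url):
    """Return one record per named layer in a WMS 1.1.1 capabilities document."""
    root = ET.fromstring(xml_text)
    out = []
    capability = root.find("Capability")
    if capability is not None:
        for top in capability.findall("Layer"):
            _walk(top, None, service_url, out)
    return out
```

Each record is one row in a layer-level table, so a single server advertising fifty layers yields fifty searchable entries instead of one opaque service entry.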

By using this simple API and a properly populated catalogue, we turned uDig into a client that implemented all the elements of the publish-find-bind paradigm, and exposed them with a drag-and-drop user interface. A user types a keyword into the uDig search panel, selects a result from the returned list, and drags it into the map panel, where it automatically loads.
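The find step behind that search panel is just the URL shown earlier: a keyword plus a longitude/latitude bounding box. A minimal Python sketch of both sides follows: building that query URL, and a keyword-plus-bounding-box filter of the kind a layer-by-layer catalogue could answer it with. The `search_layers` filter and its layer records are hypothetical; the real service was built on PostgreSQL and PostGIS, where the box test would more likely be the PostGIS `&&` bounding-box operator, and the endpoint may no longer be online.

```python
from urllib.parse import urlencode

# Endpoint taken from the example URL above; it may no longer be online.
SEARCH_BASE = "http://udig.refractions.net/search/google-xml.php"


def build_search_url(keywords, xmin=-180, ymin=-90, xmax=180, ymax=90):
    """Build a catalogue query URL from a keyword and a lon/lat bounding box."""
    params = {"keywords": keywords, "xmin": xmin, "ymin": ymin,
              "xmax": xmax, "ymax": ymax}
    return SEARCH_BASE + "?" + urlencode(params)


def bbox_intersects(a, b):
    """True if two (xmin, ymin, xmax, ymax) boxes overlap; touching edges count."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def search_layers(layers, keyword, bbox):
    """Hypothetical server-side filter: case-insensitive keyword match on the
    layer title plus a bounding-box overlap test, one result per layer."""
    kw = keyword.lower()
    return [layer for layer in layers
            if kw in layer["title"].lower() and bbox_intersects(layer["bbox"], bbox)]


if __name__ == "__main__":
    print(build_search_url("robin"))
    # Hypothetical catalogue rows: one per layer, not one per service.
    layers = [
        {"title": "Robin sightings", "bbox": (-125, 48, -120, 50)},
        {"title": "Road network", "bbox": (-125, 48, -120, 50)},
    ]
    print(search_layers(layers, "robin", (-180, -90, 180, 90)))
```

With the default whole-world box, `build_search_url("robin")` reproduces the example query URL; narrowing the box is how a client would restrict results to its current map extent.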

So where does this leave the OGC catalogue effort? Still waiting for clients. There are server implementations from the vendors who sponsored the profile effort, but the client implementations are the usual web-based show’n’tell clients, not clients cleanly integrated into actual GIS software. All web services standardization efforts have a chicken-and-egg quality to them, as the network effects of the standard do not become apparent until a critical mass of deployed clients can hit a critical mass of populated servers. Both halves of the equation are required: useful clients, and useful servers.

09 Oct 2005

I will write a technically oriented entry eventually, but right now there are some business issues I still want to explore!

Because most open source proponents are developers themselves, they tend to cast the adoption problem in terms of technical features. The theory goes: “once a product achieves a particular feature level, it will be rapidly adopted, because it is better”. This view has a couple of problems, in that:

- it ignores switching costs entirely (both monetary and emotional); and,

- it assumes the open source product can provide every feature required by the market.

Switching costs are almost insurmountable, regardless of the technology, and regardless of whether the alternative is proprietary or open source. Generally speaking, once a piece of technology is deployed, it is not going anywhere, until it hits end of life. Exceptions abound, of course, but it is a good rule of thumb.

Looking at the open source deployment success stories, most back up this theory: Linux and Apache grew like crazy in green fields. They were rarely replacing existing systems; they were filling in gaps and providing new capability. Again, exceptions abound, but keep your eye on the larger picture.

Feature completeness is a different beast, and it is where open source has a hard time competing with proprietary. Once an organization has gotten over the switching hump, for whatever reason, they have to decide what to switch to. They tot up the lists of features they “need”, and then put out a request to vendors to propose products that meet their list of needs. Bzzt! Strike one for open source: there is no dedicated vendor! Which means either the organization itself needs to take the initiative to bring open source to the table, or some other company that sees a side opportunity to make money has to bring it in.

Now they compare their feature “needs” with the products. Almost invariably, no product meets 100% of the needs, but that is OK, because vendors can provide a “roadmap” of future development, which hopefully covers their requirements.

Bzzt! Strike two for open source. Open source projects generally make no promises about particular features by particular dates. At best a wish list of future features (“subject to interest and time”) is provided. So open source projects which provide only partial coverage of requirements are rejected. Do not pass go, do not collect any dollars.

The problem is that the technology evaluation and standardization process in many large organizations has been shaped over the years to optimally match the properties of the existing proprietary software market:

- products are provided “finished”, for an up front purchase price;

- products are marketed by vendors, they do not exist in isolation;

- products are owned and supported by a single organization;

- the onus is on the vendor to supply most of the effort in proving the worth of the product (“respond to the following 256 categories in the Request for Proposals”), not the client to evaluate the product independently.

For an organization to start to make optimal use of open source, habits developed over decades have to be stood on their head:

- products are only as good as the client makes them (through the strategic application of money or effort);

- great products must be sought out by the client;

- support may be provided by many vendors, or by the client itself, if their pockets are deep enough to hire a pool of expertise;

- the onus is on the client to evaluate the worth of the product.

But that hardly seems like an improvement! Now the client is doing way more work! And that is a big hurdle in getting people to invert their thinking about product evaluation and adoption. In order to reap the privilege of receiving software without licensing costs, they must shoulder more responsibility for their own technological health. With great privileges come great responsibilities.