02 Feb 2023

Last week a user noted on the postgis-users list (paraphrase):

I upgraded from PostGIS 2.5 to 3.3 and now the results of my coordinate transforms are wrong. There is a vertical shift between the systems I’m using, but my vertical coordinates are unchanged.

Hmmm.

PostGIS gets all its coordinate reprojection smarts from the proj library. The user’s query looked like this:

SELECT ST_AsText(
  ST_Transform('SRID=7405;POINT(545068 258591 8.51)'::geometry, 4979)
);

“We just use proj” is a lot less certain and stable an assertion than it appears on the surface. In fact, PostGIS “just uses proj” for proj versions from 4.9 all the way up to 9.2, and there has been a lot of change to the proj library over that sweep of releases.

- The API radically changed around proj version 6, and that required a major rework of how PostGIS called the library.

- The way proj dereferenced spatial reference identifiers into reprojection algorithms changed around then too (it got much slower) which required more changes in how we interacted with the library.

- More recently the way proj handled “transformation grids” changed, which was the source of the complaint.

Exploring Proj

The first thing to do in debugging this “PostGIS problem” was to establish if it was in fact a PostGIS problem, or a problem in proj. There are commandline tools in proj to query what pipelines the system will use for a transform, and what the effect on coordinates will be, so I can take PostGIS right out of the picture.

We can run the query on the commandline:

echo 545068 258591 8.51 | cs2cs 'EPSG:7405' 'EPSG:4979'

Which returns:

52d12'23.241"N 0d7'17.603"E 8.510

So directly using proj we are seeing the same problem as in PostGIS SQL: no change in the vertical dimension, it goes in at 8.51 and comes out at 8.51. So the problem is not PostGIS, it is proj.

Cartographic transformations are nice deterministic functions, they take in a longitude and latitude and spit out an X and a Y.

(x,y) = f(theta, phi)

(theta, phi) = finv(x, y)
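
To make the f / finv pair concrete, here is a minimal sketch using spherical Mercator, picked only because the formulas are short. It is not the projection from the bug report (that one is a transverse Mercator), and the radius `R` and the function names are my own assumptions for illustration.

```python
import math

# Assumed sphere radius in metres (the WGS84 semi-major axis), for illustration only.
R = 6378137.0


def forward(lon_deg, lat_deg):
    """Spherical Mercator f: (longitude, latitude) in degrees -> (x, y) in metres."""
    lam = math.radians(lon_deg)
    phi = math.radians(lat_deg)
    x = R * lam
    y = R * math.log(math.tan(math.pi / 4 + phi / 2))
    return x, y


def inverse(x, y):
    """Spherical Mercator finv: (x, y) in metres -> (longitude, latitude) in degrees."""
    lam = x / R
    phi = 2 * math.atan(math.exp(y / R)) - math.pi / 2
    return math.degrees(lam), math.degrees(phi)


# Round trip: going out and back should land on the starting coordinate.
x, y = forward(0.1216, 52.2065)
lon, lat = inverse(x, y)
```

The point is just that the same input always gives the same output, and the inverse recovers the input to within floating point noise.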

But not all transformations are cartographic transformations, some are transformations between geographic reference systems. And many of those are lumpy and kind of random.

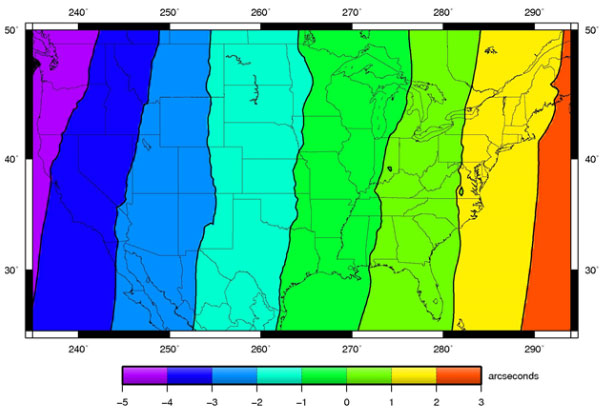

For example, the North American 1927 Datum (NAD27) was built from classic survey techniques, starting from the “middle” (Kansas) and working outwards, chain by chain, sighting by sighting. The North American 1983 Datum (NAD83) was built with the assistance of the first GPS units. The accumulated errors of survey over distance are not deterministic, they are kind of lumpy and arbitrary. So the transformation from NAD27 to NAD83 is also kind of lumpy and arbitrary.

How do you represent lumpy and arbitrary transformations? With a grid! The grid says “if your observation falls in this cell, adjust it this much, in this direction”.
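
As a rough sketch of what "look it up in a grid" means, here is a toy shift grid with bilinear interpolation between the four surrounding nodes. The `ShiftGrid` class and its fields are hypothetical names of mine, and real grid formats (and proj's own lookup code) carry a lot more detail than this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ShiftGrid:
    """A toy regular grid of shift values (hypothetical, for illustration)."""
    x0: float                  # coordinate of the first column
    y0: float                  # coordinate of the first row
    cell: float                # cell size, same units as x0 and y0
    values: List[List[float]]  # values[row][col], needs at least 2 rows and 2 columns

    def shift_at(self, x: float, y: float) -> Optional[float]:
        """Bilinearly interpolate the shift at (x, y), or None if outside the grid."""
        rows, cols = len(self.values), len(self.values[0])
        fx = (x - self.x0) / self.cell  # fractional column position
        fy = (y - self.y0) / self.cell  # fractional row position
        if not (0 <= fx <= cols - 1 and 0 <= fy <= rows - 1):
            return None  # no grid coverage here, so no shift is known
        # Clamp so that points on the far edge still use a full 2x2 cell.
        c = min(int(fx), cols - 2)
        r = min(int(fy), rows - 2)
        tx, ty = fx - c, fy - r
        v = self.values
        low = v[r][c] * (1 - tx) + v[r][c + 1] * tx
        high = v[r + 1][c] * (1 - tx) + v[r + 1][c + 1] * tx
        return low * (1 - ty) + high * ty


grid = ShiftGrid(x0=0.0, y0=0.0, cell=1.0, values=[[0.0, 10.0], [20.0, 30.0]])
print(grid.shift_at(0.5, 0.5))  # 15.0, the average of the four corner nodes
print(grid.shift_at(2.0, 0.0))  # None, the point is off the grid
```

A horizontal grid would store two values per node (a shift in each direction) and a vertical grid one, but the lookup idea is the same.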

For the NAD27->NAD83 conversion, the NADCON grids have been around (and continuously improved) for a generation.

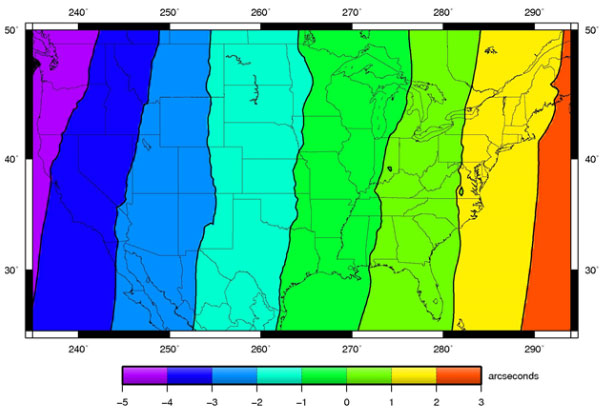

Here’s a picture of the horizontal deviations in the NADCON grid.

Transformations between vertical systems also frequently require a grid.

So what does this have to do with our bug? Well, the way proj gets its grids changed in version 7.

Grid History

Proj grids have always been a bit of an outlier. They are much larger than just the source code is. They are localized in interest (someone in New Zealand probably doesn’t need European grids), not everyone needs all the grids. So historically they were distributed in zip files separately from the code.

This is all well and good, but software packagers wanted to provide a good “works right at install” experience to their end users, so they bundled up the grids into the proj packages.

As more and more people consumed proj via packages and software installers, the fact that the grids were “separate” from proj became invisible to the end users: they just download software and it works.

This was fine while the collection of grids was a manageable size. But it is not manageable any more.

In working through the GDALBarn project to improve proj, Even Rouault decided to find all the grids that various agencies had released for various places. It turns out, there are a lot more grids than proj previously bundled. Gigabytes more.

Grid CDN

Simply distributing the whole collection of grids as a default with proj was not going to work anymore.

So for proj 7, Even proposed moving to a download-on-demand model for proj grids. If a transformation request requires a grid, proj will attempt to download the necessary grid from the internet, and save it in a local cache.
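
The download-on-demand idea is easy to sketch. This is a toy illustration of the pattern only, with a hypothetical `get_grid` helper and a stand-in fetch function instead of a real network call; it makes no claim about how proj organizes its cache internally.

```python
import tempfile
from pathlib import Path
from typing import Callable


def get_grid(name: str, cache_dir: Path, fetch: Callable[[str], bytes]) -> Path:
    """Return a local path for the grid, fetching it only if it is not cached yet."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    local = cache_dir / name
    if not local.exists():
        # Cache miss: fetch once and keep the bytes for next time.
        local.write_bytes(fetch(name))
    return local


# Stand-in for a network download, so the sketch runs without internet access.
calls = []


def fake_fetch(name: str) -> bytes:
    calls.append(name)
    return b"not a real grid"


with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "grids"
    first = get_grid("uk_os_OSGM15_GB.tif", cache, fake_fetch)
    second = get_grid("uk_os_OSGM15_GB.tif", cache, fake_fetch)
    print(first == second, len(calls))  # True 1
```

The second request is served from the cache, so the fetch function only runs once.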

Now everyone can get the very best possible transformation between systems, everywhere on the globe, as long as they are connected to the internet.

Turn It On!

Except… the network grid feature is not turned on by default! So for proj 7 and higher, the software ships with no grids, and the software won’t check for grids on the network… until you turn on the feature!

There are three ways to turn it on, I’m going to focus on the PROJ_NETWORK environment variable because it’s easy to toggle. Let’s look at the proj transformation pipeline from our original bug.

projinfo -s EPSG:7405 -t EPSG:4979

The projinfo utility reads out all the possible transformation pipelines, in order of desirability (accuracy) and shows what each step is. Here’s the most desirable pipeline for our transform.

+proj=pipeline
  +step +inv +proj=tmerc +lat_0=49 +lon_0=-2 +k=0.9996012717 +x_0=400000
        +y_0=-100000 +ellps=airy
  +step +proj=hgridshift +grids=uk_os_OSTN15_NTv2_OSGBtoETRS.tif
  +step +proj=vgridshift +grids=uk_os_OSGM15_GB.tif +multiplier=1
  +step +proj=unitconvert +xy_in=rad +xy_out=deg
  +step +proj=axisswap +order=2,1

This transform actually uses two grids! A horizontal and a vertical shift. Let’s run the shift with the network explicitly turned off.

echo 545068 258591 8.51 | PROJ_NETWORK=OFF cs2cs 'EPSG:7405' 'EPSG:4979'

52d12'23.241"N 0d7'17.603"E 8.510

Same as before, and the elevation value is unchanged. Now run with PROJ_NETWORK=ON.

echo 545068 258591 8.51 | PROJ_NETWORK=ON cs2cs 'EPSG:7405' 'EPSG:4979'

52d12'23.288"N 0d7'17.705"E 54.462

Note that the horizontal and vertical results are different with the network, because we now have access to both grids, via the CDN.

No Internet?

If you have no internet, how do you do grid shifted transforms? Well, much like in the old days of proj, you have to manually grab the grids you need. Fortunately there is a utility for that now that makes it very easy: projsync.

You can just download all the files:

projsync --all

Or you can download a subset for your area of concern:

projsync --bbox 2,49,2,49

If you don’t want to turn on network access via the environment variable, you can hunt down the proj.ini file and flip the network = on variable.

22 Jan 2023

Last week, my friend and mentor Mark Sondheim died.

I am writing a little reluctantly, because I only ever knew a fraction of who Mark was. We were professional friends, we met and worked together because of technology and software. While I knew he was a devoted husband and father, I never really knew much of the texture or detail of those relationships, just that they were. So I cannot really celebrate Mark in full; perhaps it is the nature of modern life that it’s hard for anyone to be celebrated that way, but I do want to celebrate the person I knew.

I met Mark in 1997, early in my career, and was very fortunate to do so. By that point Mark was a manager in the BC government, overseeing a number of different projects in the mapping and analysis of resources, and one of those was a watershed analysis project I had managed to worm my way into as a sub-contractor.

It became very clear early on that Mark was interested in the details of what was going on in his projects. He wasn’t a “just get it done” manager, he was a “how is it going, what’s working what’s not, let’s talk about it” manager. He was endlessly curious and constructive, and that was something I tried to model later in my career when I had project teams of my own.

I think Mark’s curiosity manifested itself most in his willingness, his eagerness rather, to attack core problems in our field directly, rather than delegating the problems to a vendor or some other promise-maker. He trusted in the smart people around him, and I am proud to have been one of the smart people he trusted.

For most of you, Mark’s name will be one you haven’t heard before, but if you work in geospatial technology, you have been touched by his work, because it is woven all through the centre of the open source software you use.

I’ve mentioned this in talks in the past. Mark’s foresight in building the case and finding the funding for the development of JTS led directly to GEOS, which sits inside QGIS and PostGIS. And that was just one example of Mark’s willingness to take a risk to seed innovation in our industry.

Not all or even most of those seeds were open source. Safe Software (which makes our industry’s finest ETL tool, the FME) was born of Mark’s pursuit of a generic data standard, SAIF, that was used in BC for a couple decades. Mark contracted the founders of Safe to build the first SAIF translators and encouraged them to continue their work, which led to the FME and a still-flourishing local company.

Well before I met him, Mark led a team that built an early large scale analysis engine, CAPAMP, that was used for reporting and project work in BC for many years. Reading the acknowledgements Mark wrote, you can see him already working with teams of really smart people, building something detail oriented, not outsourcing risk and innovation, but gathering it close and nurturing it. I think it’s no coincidence that the list of collaborators also includes many of the future leaders of the BC geospatial sector.

I often tried to get Mark to admit the extent of his influence and importance to our industry, but he demurred. He’d point to his own professional models, like his early boss Art Benson, who granted him the freedom to experiment and explore within a government structure that too often defaulted to risk management and timidity. He paid forward his lessons though, and gave that same freedom to the people who worked for him, including me.

For me, that freedom meant being able to propose solutions that didn’t conform to “industry standards” as defined by government, but just worked. Superficially risky things like PostGIS and JTS and the FME that, 15 years after Mark was accepting and encouraging them, are now recognized as the workhorses of our industry.

The first production PostGIS database was run in our office and used as the backing store for another of Mark’s innovative projects, the BC Digital Road Atlas. Mark was PostGIS customer zero. Much of the early development of PostGIS was spurred by the needs of the DRA.

The BC Freshwater Atlas was built with PostGIS and JTS, after Mark turned around a project that was headed nowhere fast. In some ways, the project was a culmination of his earlier bets on PostGIS and JTS. The tools were at hand, because he’d incubated them years earlier. In the grand tradition of government, I don’t think Mark ever got credit for this project, which would have either not delivered, or delivered at 5x the cost if he hadn’t re-imagined it. Mostly he got grief, which he bore with gracious patience.

I don’t think I ever saw Mark get visibly angry. In fact, my first memory of him is an incident in which he was protecting me from the (loud, yelling) anger of his own boss, who felt (correctly, in all honesty) that I hadn’t moved a small project of his forward fast enough. Mark brought the temperature down, even though it was his own boss he was dealing with, and helped us focus on the work, what needed to get done. In the future, we dealt with no end of frustrating or potentially angering problems together, but his default was always calm, and always finding the next step to a solution.

In later years, when we had both left our connections with the provincial government behind, it was still always a pleasure to talk with Mark, because he retained his bottomless curiosity and willingness to honestly share. What was I working on, how was it hard, had I considered this approach? This is what he was thinking about, did I have any thoughts, should he do this? As an intellect, Mark was a friendly giant, he never calcified, and he didn’t arrive with a lot of ego. He was always a pleasure.

I will miss Mark a great deal, and our industry will too, even if mostly it doesn’t realize the debt it owes him. Thank you Mark, for all you shared with me on your journey.

23 Oct 2022

Another year, another IT public accounts summary. I’m finding it hard to tease a story out of this one.

On the one hand, the total has begun going up again, though not so far as to notch a new record.

On the other hand, for the first time in 10 years, the amount of money taken in by ESIT (formerly HP Advanced Systems (formerly EDS)) has plummeted.

Not only has ESIT revenue gone into free-fall, the beneficiaries appear to be… Maximus and Deloitte? So not so much a reduction in outsourcing to international consultancies, as a reallocation.

Meanwhile, dollars to local firms seem to have flattened out.

What’s the story here? I have no idea. The reduction in dollars to ESIT seems like it might be reflective of an insourcing. The simultaneous increase in dollars to Deloitte and Maximus? I have no idea what might have driven that. In particular Maximus, which should have been swimming upstream after MSP premiums were eliminated.

I will make an effort to bring in the Health Authorities this year and get caught up on that piece of the puzzle, there may be some interesting trends there.

17 Oct 2022

Matt Asay’s piece today about the skills shortage in cloud got me to thinking about what constitutes the required knowledge to work on various projects, and how much implicit knowledge is bound up with any given “single” technology.

Like, what should you know to “do cloud”?

Doing Cloud

Well, “containers” I guess would be a core building block, but what does that imply? Some knowledge of Linux system administration, so you can understand things like the shell and environment. Lots of network administration as well, to understand routing rules and name resolution. Perhaps some package management so you can install some dependencies and build out others. Really, sounds like you need to understand all the things a late 90’s era sysop did. And we haven’t even started in on the actual cloud part yet!

For that you need to understand the abstraction over the actual machines that the container management environment (pick one, pick ten) provides, the configuration system for that environment (declarative? programmatic?), and whatever security/authentication/roles system your cloud uses. Multiply by N for “multi-cloud”.

And that’s just for the container part! Want to get into object stores (S3/R2) and production deployments? Add in a pretty solid understanding of HTTP, CDN options, DNS and TLS.

Do you have to manage queues? Databases? ML? Each cloud has its own subtly different and variegated offering, and each area has its own unique set of skills you need to master, whether it be DBA concepts or domain languages or security needs.

I can rattle off all these things because I have learned them all to some extent or other over the course of my career, and that’s great but I’m 51 years old. I am constantly amazed that any new graduate can find enough initial purchase to start a career in technology.

Doing PostGIS

Like, want to get involved in PostGIS? Easy, “it’s in C”! Except, well actually we’ve got some C++ bits, but frankly if you know C you probably know C++ too. C and C++ both embed a macro language (CPP) so you need to know that to understand the code.

But wait, you want to build it? Well the build system is autotools. That’s an unholy mixture of M4 and bash shell scripting. Fortunately you can mostly ignore the M4 but bash skills are definitely required.

Naturally you’ll also have to understand Makefile syntax.

Of course PostGIS is a PostgreSQL extension, so to even understand what we’re doing you need to understand SQL. You’ll end up learning the PostgreSQL extension and function APIs by osmosis via the PostGIS code base, but it’s still another thing.

Inevitably you will make a mistake, so you’ll need to understand a debugger, probably gdb or lldb, which are glorious command-line tools with their own terse commandline syntax.

Ooops! Forgot the UNIX commandline! That’s table stakes, really, but there you go.

Also forgot git, which is one of the few tools that is common across most branches of practical modern IT.

The documentation is all in DocBook XML, but you can probably figure that out by inspection.

You probably won’t change the WKT lexer/parser, but that’s in flex/bison. There’s also a protobuf binding that has its own little domain language.

Fixes? Nah.

One of the reasons I don’t do “real javascript” is because doing this exercise with any modern Javascript project involves a similar-but-much-longer litany of completely different tooling from the tooling I already know.

I learned Go relatively recently, and I have to say that even though I did have to, yes, learn all about build and test and debug and modularity for Go, at least there wasn’t a huge pile of options to wade through – the Go community mostly “picked one” for the core tools. (Not true for database connectivity though! You can choose from among multiple PostgreSQL client libraries!)

I don’t know how to end this except to say it feels like my whole career has been spent watching geological layers of technology pack down on each other, layer upon layer upon layer, and that the result seems, in aggregate, completely unapproachable.

I wait for some kind of simplifying moment.

The repeated theme year after year after year has been that “encapsulation” and “abstraction” will at least allow us to ignore the lowest layers. To some extent that is true – I have made it through a whole career without having to learn assembler, and I probably will never need to.

But so many of the other promises have failed to play out.

Object orientation did not result in a new world of stringing together opaque components. The insides still matter intensely and people still have to understand the parts.

Containers have flattened out the need to worry about dependency chains, but the chains are still there inside the containers, and managing the production deployment of containers is now a career defining field in its own right.

An Expanding Universe

It’s possible that most of this apparent exponential complexity explosion is just due to the ever widening scope of what “working with computers” means.

The number of things you can do with computers is just orders of magnitude larger than when I started working with them. So the number of tools is similarly orders of magnitude larger. Dog bites man.

However, I have had the privilege of seeing most of the layers laid down, so I didn’t have to learn them all at once to become productive. Each piece came along in its time, and added a little to the stack.

So, I stand amazed at newly minted technologists who can start, and get productive, in this intellectual garbage tip we call a “profession”. We couldn’t have built a less enjoyable or consistent collection of tools if we had tried, and yet you persevere and exceed all of us. Hats off to all of you!

01 Oct 2022

I had coffee with an IT colleague here in Victoria last week, and he was interested in getting into core PostgreSQL programming. “What resources would you recommend I look at?”

That’s… a hard question!

PostgreSQL is a huge code base with a multi-decade history. I’ve been poking around the edges for almost 10 years and feel comfortable with the extension APIs, foreign data wrappers, access methods APIs, some system catalogue stuff… maybe 5% of the surface area of the beast?

So, what advice for someone who wants to dive much much deeper than that?

First, start with the vision, and read “The Design of Postgres” (Stonebraker & Rowe, 1985) to get a sense of what distinguished Postgres from its predecessors: complex objects; user extensibility; and active database facilities; all while retaining relational concepts.

Second, take a run through Bruce Momjian’s “internals” presentations. These tend to be a little older, Bruce hasn’t been doing deep core work for a while, but he’s an expert teacher and explainer, so they are useful to get a feel for the shape of things. In a similar (and more recent) vein, my colleague Stephen Frost walks through the code base in this 2018 talk about adding a new feature to PostgreSQL.

Third, consider spending some time with “The Internals of PostgreSQL”. This is a very detailed look at PostgreSQL subsystems, including header structures and data flow. As with any book, it may have already drifted a bit from the particulars of current PostgreSQL, but there is no other resource I know that even attempts to explain internals at this granularity.

Fourth, the source code itself is an amazing resource, and the commentary in header files and function descriptions is very good. The incredibly detailed and stringent source code review process of the PostgreSQL community not only expects good code, but also good documentation of what the code does. I’m not sure how much this can be traced back to the influence of Tom Lane (whose comments are frequently complete technical manuals) but regardless the culture of good internal documentation is in place, and makes the code as “approachable” as a system of this complexity could hope to be.

Now things get harder.

Conference talks are a moving target, because they tend to focus on the leading edge of things, but there are some community members who regularly give talks about their work on core topics, that must necessarily explain how things work in order to contextualize things.

Unfortunately, PostgreSQL conferences have a habit of … not making recordings! So there’s relatively little online. I have seen some amazing talks (the multi-session query planner master class Tom Lane gave at PgCon 2011 sticks out) but most of them are lost to the ages.

The best conference to go to for core technical content is undoubtedly PgCon. (I will see you there this spring!)

COVID robbed us of many things, but it did cause PgCon to record and publish a number of standout technical talks that might otherwise have never been available.

Here are the presenters I always mark down in my program and rush to get a seat for:

- Andres Freund, who while hammering out incredibly hard technical work still makes time to explain what he is up to.

- Robert Haas, who keeps pushing really important things from his perch as EDB CTO

- Amit Kapila, who is quietly banging out impressive work every release

- Melanie Plageman, who creates jaw droppingly good explanations of really hard topics (the query planner talk blew my mind)

- Peter Geoghegan, who goes right to the foundations and builds things up (unfortunately the btree talk he gave, which was a tour de force, is not online, perhaps this nbtree talk is an acceptable substitute)

I would also go to any talk Tom Lane felt like giving. And Tomas Vondra, and Thomas Munro, and Oleg Bartunov.

Learning the PostgreSQL code base is a journey of a million steps, that’s for sure. One thing that all my effective personal learning has had in common is a practical need. My learning has been in support of some actual work that needed to be done for my employer of the moment. It had motivation, a start point and an end point. That was really helpful.

Best of luck in your PostgreSQL journeys!

Additional Notes

Because of course I left out some stuff in the first draft:

- Once you are at the level of perusing source code, you should also be reading the contents of the pgsql-hackers mailing list, and the current commitfest patches. This will help you understand the culture of contribution, and also get a feel for where work is ongoing.

- One gateway to contribution is patch review. Starting with basic things like “does it compile against main” and “does it do what the author says”. Read the other reviews, see what other reviewers look for, wash rinse repeat. I still think that having your own small patches put through the process is invaluable learning. Doing some review of other people’s patches is a way of “paying it forward” for reviews your own patches receive.

- The amazing Oleg Bartunov wrote in to tell me there is now a second book on PostgreSQL internals, “PostgreSQL 14 Internals. A deep dive into the server mechanics”. Currently in Russian, but an English translation is going on right now.